Innovating with machine learning at Seattle-Tacoma Airport


Posted: 6 July 2020 | Seattle-Tacoma International Airport

Innovation is on the doorstep: Seattle-Tacoma International Airport details the opportunities that artificial intelligence (AI) and machine learning (ML) offer airports.

Seattle-Tacoma International Airport (SEA) is working on a wide range of practical applications to improve the passenger experience and operational efficiency, from escalator safety to tracking garage vehicle activity, wildlife, roadway congestion and detailed aircraft operations on the ramp.

Classification of aircraft at parking stands

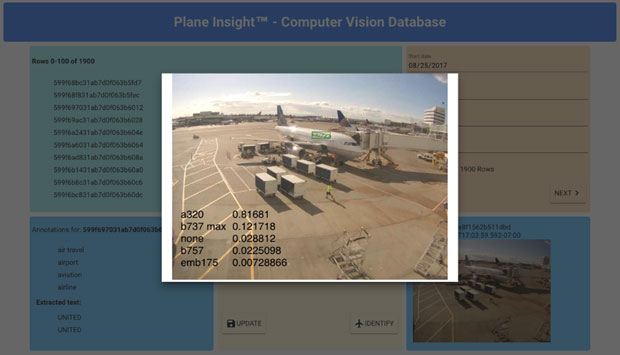

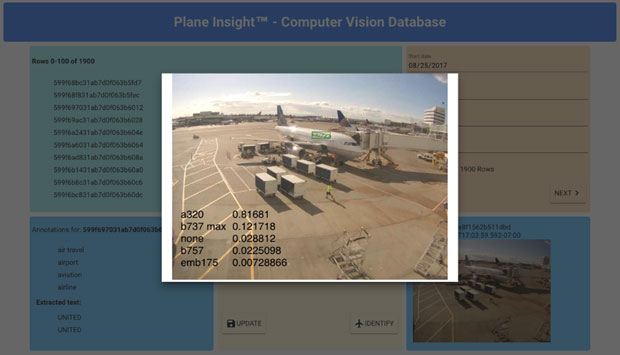

One particular opportunity for learning and efficiency is at aircraft parking stands. We began experimenting with an image classification model to determine the type of aircraft at a parking stand. The goal was to test whether it could reliably determine the occupancy of a gate's aircraft parking stand using our existing security camera feeds. The output of such a system could be used to update the Airport Operations Database (AODB) in real time for scheduling purposes, and could be collected to analyse usage trends, verify revenue and the like.
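Per-snapshot predictions may flicker, for example while an aircraft is taxiing in, so a system feeding the AODB would plausibly smooth them before reporting a change. The sketch below shows one way to do that, assuming a classifier that returns one label per snapshot. The `StandOccupancy` class, the majority-vote window and the label names are hypothetical illustrations, not SEA's implementation.

```python
from collections import Counter, deque


class StandOccupancy:
    """Smooths per-snapshot classifier labels before reporting a stand change.

    Hypothetical helper: a change is reported only when one label wins a
    strict majority of the last `window` snapshots and differs from the
    currently reported state.
    """

    def __init__(self, window=5, empty_label="empty"):
        self.recent = deque(maxlen=window)  # sliding window of recent labels
        self.window = window
        self.state = empty_label            # last state reported to the AODB

    def update(self, label):
        """Add one prediction; return the new state if it changed, else None."""
        self.recent.append(label)
        if len(self.recent) < self.window:
            return None  # not enough evidence yet
        winner, count = Counter(self.recent).most_common(1)[0]
        if count * 2 > self.window and winner != self.state:
            self.state = winner
            return winner
        return None


# Usage: hypothetical labels from consecutive snapshots of one stand.
stand = StandOccupancy(window=5)
snapshots = ["empty", "B737", "B737", "empty", "B737", "B737", "B737"]
changes = [stand.update(s) for s in snapshots]
```

Here only the fifth snapshot triggers an update ("B737"); the isolated "empty" prediction is absorbed by the vote. A longer window trades reporting latency for fewer spurious changes.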

We used two adjacent gates for this prototype, collecting snapshots at those gates over several months to create the training dataset. The dataset also included public domain images of the aircraft types we were interested in. All images were manually categorised by the type of aircraft they showed, with a special category for an empty gate.

We used an existing Convolutional Neural Network (CNN) from Google for this classification. Convolutions are digital filters that are mathematically convolved with an image to extract the features hidden in it. A technique known as transfer learning, which reuses a network already trained on general images and retrains it on new labelled images, was used to specialise the network to the aircraft types we were likely to see at those gates.
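To make the idea of a convolution concrete, here is a minimal pure-Python sketch of a "valid" 2D convolution applied to a tiny grey-scale image. The 3×3 kernel is a hand-picked edge filter used only for illustration; in a CNN the kernel values are learned during training, and deep learning libraries typically compute the unflipped variant (cross-correlation), which learns equivalent filters.

```python
def convolve2d(image, kernel):
    """'Valid' 2D convolution: flip the kernel, then slide it over the image."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    # True convolution flips the kernel horizontally and vertically.
    flipped = [row[::-1] for row in kernel[::-1]]
    out = []
    for y in range(ih - kh + 1):
        row = []
        for x in range(iw - kw + 1):
            # Weighted sum of the image patch under the kernel.
            acc = 0
            for j in range(kh):
                for i in range(kw):
                    acc += image[y + j][x + i] * flipped[j][i]
            row.append(acc)
        out.append(row)
    return out


# A 5x5 "image" with a dark-to-bright vertical edge between columns 1 and 2.
image = [[0, 0, 9, 9, 9] for _ in range(5)]
# Horizontal-gradient filter: responds to vertical edges.
kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
edges = convolve2d(image, kernel)
```

Every row of `edges` is `[27, 27, 0]`: the large responses mark the window positions that straddle the boundary, and the uniform bright region gives zero. This is the kind of low-level feature the early layers of a CNN pick up.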

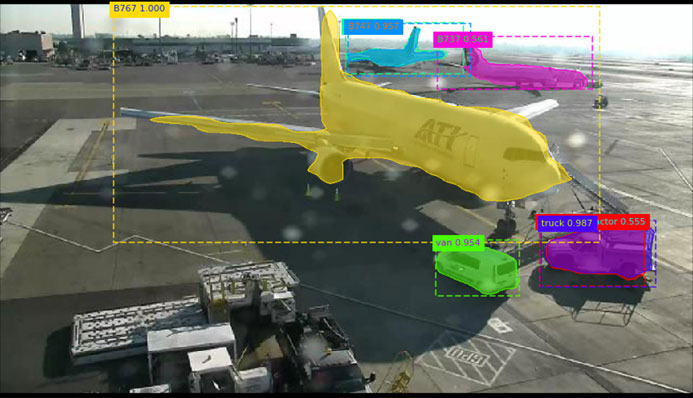

Figure 1: Plane insight – aircraft classification prototype

We deployed the model and showed that it worked well, making aircraft type predictions within a few seconds on commodity multicore CPUs. The success of this prototype led to a pilot project for air cargo operations in 2017.

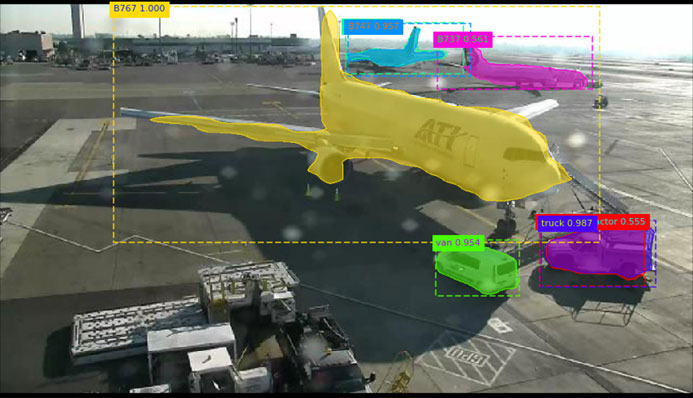

Air cargo equipment identification at parking stands

After several reviews and ideation sessions with our Air Cargo team, it became obvious that a simple classification model could not provide the information the team needed. We needed to identify not only the aircraft type but also the ground equipment at a parking stand from a single image. Additionally, in some locations a single camera views multiple stands. What was needed was an Object Detection and Segmentation neural network. These models can detect multiple, potentially overlapping, objects in the same image and provide each object's type (label), its position and size in the image (bounding box) and its general outline (mask).
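Because one camera may cover several stands, each detection has to be attributed to a stand. A minimal sketch, assuming stands can be approximated by fixed rectangles in the camera image and attributing each detection by the centre of its bounding box. The `Detection` class, `STAND_REGIONS`, the label names and the score threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # predicted class, e.g. "aircraft" or "ladder"
    score: float  # model confidence in [0, 1]
    box: tuple    # bounding box (x1, y1, x2, y2) in pixels
    # A segmentation model also returns a per-object mask; omitted here.


# Hypothetical stand regions for one camera that views two stands,
# as axis-aligned rectangles (x1, y1, x2, y2) in image pixels.
STAND_REGIONS = {
    "stand_A": (0, 200, 640, 720),
    "stand_B": (640, 200, 1280, 720),
}


def assign_to_stands(detections, regions=STAND_REGIONS, min_score=0.5):
    """Group confident detections by the stand region containing their box centre."""
    by_stand = {name: [] for name in regions}
    for det in detections:
        if det.score < min_score:
            continue  # drop low-confidence detections
        cx = (det.box[0] + det.box[2]) / 2
        cy = (det.box[1] + det.box[3]) / 2
        for name, (x1, y1, x2, y2) in regions.items():
            if x1 <= cx < x2 and y1 <= cy < y2:
                by_stand[name].append(det.label)
                break
    return by_stand


# Usage: one frame's (invented) detections.
frame = [
    Detection("aircraft", 0.97, (100, 250, 600, 650)),
    Detection("ground_power_unit", 0.88, (700, 500, 800, 600)),
    Detection("ladder", 0.30, (900, 400, 950, 500)),  # below threshold
]
by_stand = assign_to_stands(frame)
```

Using the box centre keeps the rule simple; with overlapping or oblique views, polygon regions or mask overlap would likely attribute objects more reliably.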

Continuously recording these detections provides the raw data for statistical analysis of planned versus actual schedules, equipment inventory and real-time alerting of unusual or dangerous situations. For example, if a ladder or ground power unit is in the middle of a parking stand and an aircraft is expected to arrive soon, an alert may be issued. The raw data also enables us to build higher-level neural networks that determine the phase of operation at a parking stand (for example, unoccupied, preparing for arrival, unloading, at the gate, loading or preparing for departure).
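The alerting example above can be expressed as a simple rule over the labels detected on a stand and the expected arrival time. This is a hypothetical sketch: the obstruction labels, the 15-minute look-ahead and the source of the arrival time are assumptions, and a production system would likely combine such rules with learned models.

```python
from datetime import datetime, timedelta

# Hypothetical equipment labels that must be clear of a stand before arrival.
OBSTRUCTIONS = {"ladder", "ground_power_unit"}


def arrival_alerts(stand_labels, expected_arrival, now, lead=timedelta(minutes=15)):
    """Return alert messages for obstructions on a stand with an imminent arrival.

    stand_labels: labels currently detected inside the stand.
    expected_arrival: scheduled arrival time (assumed to come from the AODB).
    lead: how long before arrival the check starts (an assumed policy value).
    """
    if not (now <= expected_arrival <= now + lead):
        return []  # no arrival within the look-ahead window
    found = sorted(OBSTRUCTIONS.intersection(stand_labels))
    return [f"{label} on stand, arrival at {expected_arrival:%H:%M}" for label in found]


# Usage: a ladder is on the stand and an aircraft is due in ten minutes.
now = datetime(2020, 7, 6, 10, 0)
alerts = arrival_alerts(["ladder", "baggage_cart"], now + timedelta(minutes=10), now)
```

Here `alerts` contains one message for the ladder; the baggage cart is ignored because it is not in the hypothetical obstruction set.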

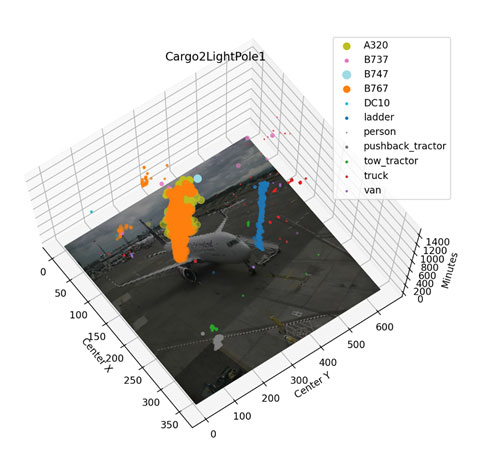

Figure 2: Plane insight – object detection and segmentation

We started with the best Deep Neural Network (DNN) model available at the time, an open source project fully described in papers published by Microsoft Research and Facebook AI Research. After identifying which cameras to use, we began the long process of collecting a large set of images for the training dataset and the tedious process of annotating them all, partly with the help of our summer interns working on ML. The successful results led to a pilot deployment that has been in operation for over a year.
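The article does not say which annotation format was used. As an illustration only, assuming the widely used COCO-style format for detection and segmentation data, each annotated object records a mask outline, a bounding box and an area. The sketch below derives the box and area (via the shoelace formula) from an annotator's outline polygon; the IDs and coordinates are made up.

```python
import json


def polygon_area(xs, ys):
    """Shoelace formula for the area of a simple polygon."""
    n = len(xs)
    s = sum(xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n))
    return abs(s) / 2


def coco_annotation(ann_id, image_id, category_id, polygon):
    """Build one COCO-style instance annotation from an outline polygon.

    polygon: flat list [x1, y1, x2, y2, ...] traced by the annotator.
    """
    xs, ys = polygon[0::2], polygon[1::2]
    x_min, y_min = min(xs), min(ys)
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,
        "segmentation": [polygon],  # mask outline as one polygon
        "bbox": [x_min, y_min, max(xs) - x_min, max(ys) - y_min],  # x, y, width, height
        "area": polygon_area(xs, ys),
        "iscrowd": 0,
    }


# Usage: a rectangular outline around a hypothetical piece of ground equipment.
ann = coco_annotation(1, 42, 3, [10, 20, 110, 20, 110, 70, 10, 70])
print(json.dumps(ann))
```

Deriving the box and area from the traced outline means annotators only draw the polygon once, which helps with the tedious part of the work.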

Figure 3: Detected objects and their locations over 24 hours

Passenger processing

One of the earliest ideas for using ML at SEA was a conversational wayfinding bot, which was also our first attempt at Natural Language Processing (NLP). The basic idea is to provide an application that a passenger could speak to, either on her mobile device or at a kiosk, to ask questions about the airport. Fortunately, most language processing capabilities are available through Application Programming Interfaces (APIs), either on devices or in the cloud. Language detection and speech-to-text are usually performed on the device itself, and the resulting sentences are processed by specialised language understanding models. Google's Dialogflow, Microsoft's LUIS, Amazon Lex and IBM Watson Assistant all allow the creation of domain-specific language understanding models that identify the user's intent. When the knowledge set is small and specialised, for example the locations of gates or bathrooms at an airport, training such a model with typical sentences and questions is relatively straightforward.
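Managed services such as those named above learn intents from example utterances and generalise well beyond them. To show only the underlying idea, here is a deliberately simple stand-in, not any of those services' APIs: it scores an utterance against hypothetical training phrases by word overlap (Jaccard similarity) and falls back when nothing matches well enough. The intents, phrases and threshold are assumptions.

```python
import re

# Hypothetical training utterances per intent for a small wayfinding domain.
TRAINING = {
    "find_gate": ["where is gate a5", "how do i get to my gate", "which way to gate"],
    "find_restroom": ["where is the bathroom", "nearest restroom", "i need a toilet"],
    "find_food": ["where can i eat", "is there a coffee shop", "food near me"],
}


def tokens(text):
    """Lower-case word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def classify_intent(utterance, training=TRAINING, threshold=0.3):
    """Return (intent, score) for the best-matching example, or ("fallback", score)."""
    words = tokens(utterance)
    best_intent, best_score = "fallback", 0.0
    for intent, examples in training.items():
        for example in examples:
            ex = tokens(example)
            union = words | ex
            score = len(words & ex) / len(union) if union else 0.0
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        return "fallback", best_score
    return best_intent, best_score


# Usage
restroom = classify_intent("Where is the nearest bathroom?")
gate = classify_intent("Where is gate B7?")
unknown = classify_intent("What time is it")
```

The bathroom question matches "where is the bathroom" with a score of 0.8, while the unrelated question falls back. The narrow domain is what makes even this crude matching workable, in line with the point about small, specialised knowledge sets.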

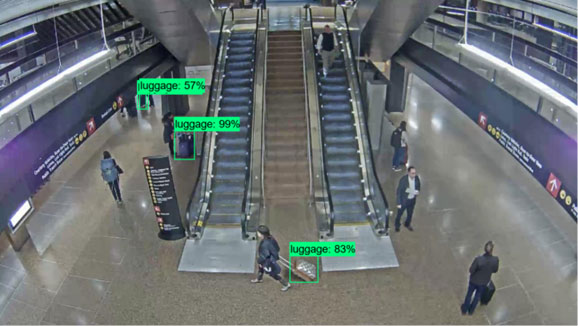

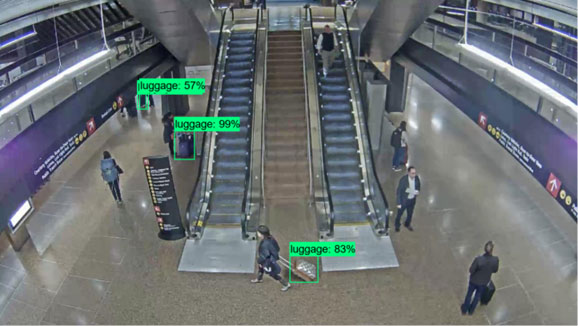

Another area we explored was computer vision for passenger safety. In this case, we were asked to assess the viability of using object detection to identify dangerous objects on escalators in real time. Dangerous objects in this context are things like strollers, walkers, wheelchairs and large suitcases. The eventual goal is to warn passengers approaching an escalator whenever such a dangerous situation is observed. In summer 2019, a group of our interns created a training dataset from security camera snapshots and public domain images, then built and trained an object detection model for these objects. They were able to show a good degree of accuracy.
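One common way to quantify the accuracy of an object detector is precision and recall at an intersection-over-union (IoU) threshold. The article does not say which metric the interns used; this is a minimal sketch assuming boxes as `(x1, y1, x2, y2)`, greedy matching of the highest-confidence predictions first and a 0.5 IoU threshold. The labels and boxes in the example are invented.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predicted to labelled boxes of the same class.

    predictions: list of (label, score, box); ground_truth: list of (label, box).
    """
    matched = set()
    tp = 0
    # Match the most confident predictions first.
    for label, _, box in sorted(predictions, key=lambda p: p[1], reverse=True):
        best, best_iou = None, iou_threshold
        for i, (gt_label, gt_box) in enumerate(ground_truth):
            if i in matched or gt_label != label:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best, best_iou = i, overlap
        if best is not None:
            matched.add(best)
            tp += 1
    precision = tp / len(predictions) if predictions else 0.0
    recall = tp / len(ground_truth) if ground_truth else 0.0
    return precision, recall


# Usage: one correct stroller, one misplaced suitcase, one mislabelled box.
gt = [("stroller", (10, 10, 50, 60)), ("suitcase", (100, 100, 140, 160))]
preds = [
    ("stroller", 0.9, (12, 12, 50, 60)),
    ("suitcase", 0.8, (300, 300, 340, 360)),
    ("wheelchair", 0.6, (100, 100, 140, 160)),
]
p, r = precision_recall(preds, gt)
```

Here precision is 1/3 and recall is 1/2. Metrics such as mean average precision (mAP) extend this idea by sweeping the confidence threshold and averaging over classes.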

Figure 4: Escalator safety intern project

Other areas of exploration

As knowledge of ML capabilities grows across our organisation, we have seen a growing number of compelling solution ideas from all quarters. Some of these include:

- Garage – use of DNN-based License Plate Recognition (LPR) and Optical Character Recognition (OCR) to keep track of parking spaces and automatically calculate fees for cars, taxis and transportation network company (TNC) vehicles

- Roadway access – use of computer vision for traffic measurement and alerting of congestion situations

- Operations – use of object detection and QR codes attached to equipment for tracking and inventory

- Operations – use of OCR for detecting and tracking airline names, tail numbers and company names

- Facilities – use of computer vision to automatically monitor hygiene of trash compactors

- Security – use of computer vision to monitor for potential attacks

- Environmental – use of computer vision to track auxiliary power unit (APU) usage while an aircraft is at a gate

- Wildlife management – use of ML for precise wildlife classification from radar data

- Operations – use of object recognition for large asset tracking

- Operations and security – use of computer vision for detecting hazardous objects and situations
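For the garage idea, automatic fee calculation reduces to pairing each plate's entry and exit reads and applying a tariff. A minimal sketch, assuming the LPR/OCR pipeline emits `(plate, gate, time)` records and a hypothetical tariff of an hourly rate with a daily cap; real tariffs, plate formats and the handling of misreads would differ.

```python
import math
from datetime import datetime

# Hypothetical tariff: an hourly rate with a daily cap, in US dollars.
HOURLY_RATE = 6.0
DAILY_MAX = 40.0


def parking_fee(entry, exit_, hourly=HOURLY_RATE, daily_max=DAILY_MAX):
    """Fee for one stay, charging each started hour and capping each 24-hour period."""
    hours = math.ceil((exit_ - entry).total_seconds() / 3600)
    days, rem_hours = divmod(hours, 24)
    return days * daily_max + min(rem_hours * hourly, daily_max)


def fees_from_plate_reads(reads):
    """Pair entry/exit plate reads into stays and price them.

    reads: list of (plate, gate, timestamp) with gate "entry" or "exit",
    as might be produced by an LPR/OCR pipeline. Unmatched exits are ignored.
    """
    open_stays, fees = {}, []
    for plate, gate, ts in sorted(reads, key=lambda r: r[2]):
        if gate == "entry":
            open_stays[plate] = ts
        elif gate == "exit" and plate in open_stays:
            fees.append((plate, parking_fee(open_stays.pop(plate), ts)))
    return fees


# Usage: two invented stays, one short and one just over a day.
reads = [
    ("ABC1234", "entry", datetime(2020, 7, 6, 8, 0)),
    ("XYZ987", "entry", datetime(2020, 7, 6, 9, 15)),
    ("ABC1234", "exit", datetime(2020, 7, 6, 10, 30)),
    ("XYZ987", "exit", datetime(2020, 7, 7, 11, 0)),
]
fees = fees_from_plate_reads(reads)
```

The 2.5-hour stay is billed as 3 started hours (18.0) and the 25.75-hour stay as one capped day plus 2 hours (52.0).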

Applications of ML for airport operations are nearly limitless. The biggest barrier to adoption is the steep learning curve required to fully understand the capabilities and tools that are available. With a deeper understanding, it becomes easier to find the nails that only an ML hammer can drive. Fortunately, many capabilities are available through cloud-based APIs that require very little upfront investment. Early experimentation and prototyping can help provide a solid understanding of ML and where it would work best for each organisation.
