Multi-biometrics for enhanced airport access control


Posted: 30 November 2007 | The Johns Hopkins University Applied Physics Laboratory, Laurel, MD 20723-6099

Biometrics is used to verify identity in activities ranging from crime solving, recidivist detection, employee and clearance screening, and remains identification to border and airport security. Biometric systems are being deployed in airports in increasing numbers.

Examples include the Registered Traveller services Clear and rtGo, IRIS and miSense in the UK, and US-VISIT and US-Exit programmes. These systems are used to reduce passenger authentication time; but they are also increasingly being recognised as having a role to play in security challenges including anti-terrorism and illegal immigration.

Authentication and identification require that the individual has previously recorded a biometric, such as a fingerprint, iris scan, or voice utterance, which is stored as a template in a database along with the individual’s name or code. For authentication, the individual gives his or her name or code and a sample of the appropriate biometric(s). The sample is compared with the stored template for that individual. Identification is a more challenging task in which the sample is compared with a gallery or watch list, usually returning a ranked list of candidate matches whose matching scores exceed a threshold. Automated systems are becoming essential in order to process the high throughput required for airport security applications. According to a recent United States Department of Justice study, 118,000 people, on average, pass through the US-VISIT system each day. Of these, 22,350, or about 19%, were subjected to additional inspection, and 92% of these were finally admitted to the US [1]. Over time, the number of enrollees and the number of watch list entries will grow. For example, by 2011 the Border and Immigration Agency intends to check biometrics from all non-European Economic Area non-visa nationals at UK arrival check-points.
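The difference between authentication (a one-to-one check against a claimed identity) and identification (a one-to-many search returning a ranked candidate list) can be sketched in a few lines. This is only an illustration: the feature-vector templates, the distance-based `match_score` function, the names and the thresholds are all assumptions made for the example, not the matchers used by any deployed system.

```python
import math
from typing import Dict, List, Sequence, Tuple

Template = Sequence[float]  # hypothetical: a template is just a feature vector here


def match_score(sample: Template, template: Template) -> float:
    """Toy similarity in (0, 1]; identical vectors score 1.0 (assumed matcher)."""
    return 1.0 / (1.0 + math.dist(sample, template))


def authenticate(claimed_id: str, sample: Template,
                 database: Dict[str, Template], threshold: float) -> bool:
    """One-to-one check: compare the sample only with the claimed identity's template."""
    template = database.get(claimed_id)
    if template is None:
        return False  # nobody is enrolled under that name or code
    return match_score(sample, template) >= threshold


def identify(sample: Template, gallery: Dict[str, Template],
             threshold: float) -> List[Tuple[str, float]]:
    """One-to-many search: ranked candidates whose score meets the threshold."""
    scored = ((name, match_score(sample, t)) for name, t in gallery.items())
    candidates = [(name, s) for name, s in scored if s >= threshold]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


# Made-up enrolment data and probe sample, for illustration only.
enrolled = {"alice": [0.0, 0.0], "bob": [3.0, 4.0]}
probe = [0.0, 0.1]
print(authenticate("alice", probe, enrolled, threshold=0.8))
print([name for name, _ in identify(probe, enrolled, threshold=0.1)])
```

Identification compares the sample with every gallery entry, which is why its error behaviour depends on the size of the watch list while authentication does not.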

Given the significantly longer time required for secondary inspections, compared to the time to collect virtually any single or dual biometric sample, it seems almost axiomatic that in order to handle increased volume, the fraction of secondary inspections must be dramatically reduced. Although more efficient processing can help, the real performance gain is tied to reducing error probabilities. The Intelligence Reform and Terrorism Prevention Act of 2004 directs the Transportation Security Administration (TSA), in consultation with representatives of the aviation industry, biometrics industry and the National Institute of Standards and Technology (NIST) to develop “… comprehensive technical and operational system requirements and performance standards for the use of biometric identifier technology in airport access control systems…”. The performance level required by the TSA includes false accept and reject rates of less than one percent. The TSA was also directed to establish a Qualified Products List (QPL) of vendor technologies and products that meet these requirements. While the first products to qualify have just been named, there has been a relative lack of vendor participation – possibly due to some vendors deciding that their technologies could not meet the requirements [2]. Although this may be disputed, the sentiment is consistent with a growing recognition that current systems have difficulty attaining such small false match and false non-match rates.

Multi-biometrics is a way to lower error rates. By combining multiple samples from the same modality, or from different modalities, performance is enhanced beyond that of each individual modality. The White House’s national strategy for homeland security calls for more advanced “multi-modal biometric recognition capabilities” using fingerprints, iris and the face [1]. Another advantage of multi-modal systems is the increase in robustness. Some people, for instance, do not have fingerprints that produce adequate images for processing, while others have voice impairments that result in poor speech signatures. Fewer individuals, however, exhibit both conditions. The remainder of this article includes a short tutorial on multi-biometric fusion and a brief analysis demonstrating its potential for improving access control operations.

Multi-biometric Fusion

Multi-biometric techniques are broadly categorised as decision-level, score-level or measurement-level fusion. Decision-level fusion reduces the information content from each measurement to a single bit. Score-level fusion is attractive because it combines scores derived separately from each biometric modality and can be readily adapted for use with existing systems without modifications to their internal algorithms. Thus, its implementation does not involve significant development time and the cost is modest. We focus on score-level fusion. Some simple techniques combine available finger impressions sequentially using decision-level logical (AND, OR) operations until all individual scores have been used, or an acceptable score confidence is obtained. Others sum the scores (with or without normalisation), or are based on a consensus of experts. More desirable, however, is a mathematical framework that provides a more nearly optimal combination of multiple biometric samples and includes the effect of measurement quality. Dempster-Shafer, support vector machine, and Bayesian fusion techniques, among others, have been developed for this purpose. Bayesian methods are well accepted and perform well under reasonable assumptions about the statistical behaviour of the scores.
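The simpler combination rules mentioned above (logical AND/OR on decisions, and summing scores after normalisation) can be sketched as follows. The min-max normalisation, the score ranges and the equal default weights are assumptions chosen for illustration; the article does not prescribe a particular normalisation.

```python
from typing import Iterable, Optional, Sequence, Tuple


def min_max_normalise(score: float, low: float, high: float) -> float:
    """Map a raw matcher score onto [0, 1], clipping values outside [low, high]."""
    if high <= low:
        raise ValueError("high must exceed low")
    return min(1.0, max(0.0, (score - low) / (high - low)))


def sum_rule(scores: Sequence[float],
             ranges: Sequence[Tuple[float, float]],
             weights: Optional[Sequence[float]] = None) -> float:
    """Score-level fusion: weighted mean of the normalised scores."""
    if weights is None:
        weights = [1.0] * len(scores)  # assumption: equal trust in every matcher
    normalised = [min_max_normalise(s, lo, hi) for s, (lo, hi) in zip(scores, ranges)]
    return sum(w * n for w, n in zip(weights, normalised)) / sum(weights)


def decision_and(decisions: Iterable[bool]) -> bool:
    """Decision-level fusion: accept only if every matcher accepts."""
    return all(decisions)


def decision_or(decisions: Iterable[bool]) -> bool:
    """Decision-level fusion: accept if any matcher accepts."""
    return any(decisions)


# Hypothetical example: a fingerprint score on a 0-100 scale and a voice score on 0-1.
fused = sum_rule([50.0, 0.8], [(0.0, 100.0), (0.0, 1.0)])
print(round(fused, 3))
```

The decision-level rules discard everything but one bit per matcher, whereas the sum rule keeps the score magnitudes; neither uses sample quality, which is what motivates the Bayesian framework.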

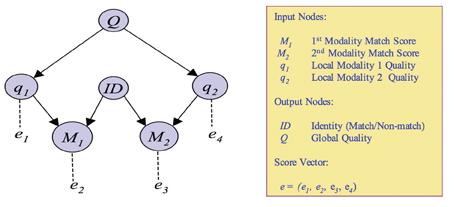

A Bayesian Belief Network (BBN) can be depicted as a graph, where the nodes represent variables and the arcs represent conditional probability relationships between variables. The example in Figure 1 has an input or measurement node and a local quality node for each modality. There are two output nodes corresponding to an identity random variable and a global quality random variable. Scores and quality measurements fed to the input nodes are combined with the conditional probabilities using Bayes’ rule to update the identity and global quality distributions. The local quality variable measures the quality of each biometric match as it is processed, whereas the global quality variable indicates the overall quality of a multi-biometric match score. The methodology is general and may be adapted to model the specifics of an intended application. It can incorporate any matching algorithm or set of modalities, as long as the probabilistic performance of each matcher can be accurately estimated. Reference [3] gives a detailed technical discussion of this BBN and its performance.
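To make the Bayes' rule update concrete, here is a deliberately simplified sketch. It is not the BBN of Figure 1 or reference [3]: it omits the global quality node, assumes the modalities are conditionally independent given the identity, and models each score with a Gaussian whose parameters depend on a two-level quality label. The `LIKELIHOODS` table and all its numbers are invented for illustration.

```python
import math
from typing import Iterable, Tuple

# Hypothetical (mean, std) of the match score for each quality label,
# under the "match" and "nonmatch" hypotheses. Real values would be
# estimated from training data for each matcher.
LIKELIHOODS = {
    "good": {"match": (0.8, 0.1), "nonmatch": (0.2, 0.1)},
    "poor": {"match": (0.6, 0.2), "nonmatch": (0.4, 0.2)},
}


def gaussian_logpdf(x: float, mean: float, std: float) -> float:
    """Log of the normal density, used to avoid underflow for extreme scores."""
    z = (x - mean) / std
    return -0.5 * z * z - math.log(std * math.sqrt(2.0 * math.pi))


def posterior_match(observations: Iterable[Tuple[float, str]],
                    prior_match: float = 0.5) -> float:
    """P(identity = Match | scores, qualities) under the assumptions above."""
    log_match = math.log(prior_match)
    log_nonmatch = math.log(1.0 - prior_match)
    for score, quality in observations:
        params = LIKELIHOODS[quality]
        log_match += gaussian_logpdf(score, *params["match"])
        log_nonmatch += gaussian_logpdf(score, *params["nonmatch"])
    diff = log_match - log_nonmatch  # log posterior odds
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    odds = math.exp(diff)
    return odds / (1.0 + odds)


# The same raw score carries more evidence when the sample quality is good.
print(posterior_match([(0.7, "good")]) > posterior_match([(0.7, "poor")]))
```

Because the likelihoods are conditioned on quality, a poor-quality sample moves the posterior less than a good one with the same score, and each additional independent sample sharpens the result.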

Using Quality to Aid Biometric Fusion

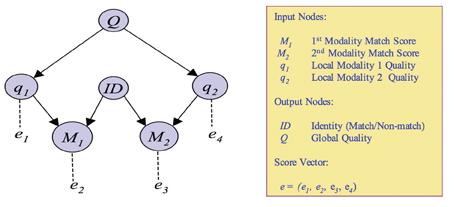

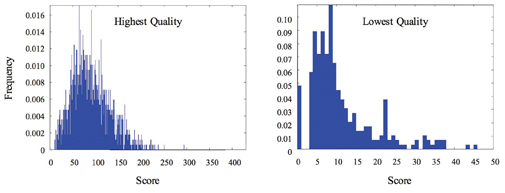

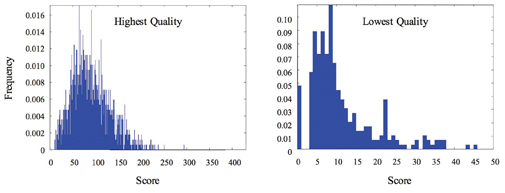

The quality of an identity match depends on the quality of the biometric samples on which it is based. Although each of us has an intuitive notion of quality, the concept must be quantified for automated processing. Ideally a quality metric is designed to be a predictor of matcher performance; therefore, good quality measurements will have lower error rates for false matches and false non-matches than poor quality measurements. Recently, NIST developed a five-level quality metric [4] for fingerprints that captures this relationship. Since quality within a single modality may vary from one sample to another, conditioning match and non-match score distributions on the quality metric has a powerful effect on the match score distributions, as Figure 2 shows.
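One way to see what conditioning on quality means in practice is to tabulate error rates separately for each quality level. The sketch below does this for labelled comparison records at a fixed threshold; the record layout, the integer quality labels and the sample data are assumptions made for the example and are not drawn from the NIST data.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[float, bool, int]  # (score, is_genuine_pair, quality_level), hypothetical layout


def error_rates_by_quality(records: Iterable[Record],
                           threshold: float) -> Dict[int, Tuple[float, float]]:
    """Return {quality_level: (false match rate, false non-match rate)}."""
    # Per level: [false matches, impostor trials, false non-matches, genuine trials]
    counts = defaultdict(lambda: [0, 0, 0, 0])
    for score, genuine, quality in records:
        c = counts[quality]
        if genuine:
            c[3] += 1
            c[2] += score < threshold   # genuine pair rejected
        else:
            c[1] += 1
            c[0] += score >= threshold  # impostor pair accepted
    return {
        q: (fm / imp if imp else math.nan, fnm / gen if gen else math.nan)
        for q, (fm, imp, fnm, gen) in sorted(counts.items())
    }


# Made-up records: level 1 scores separate cleanly, level 5 scores overlap.
records = [
    (0.9, True, 1), (0.8, True, 1), (0.2, False, 1), (0.1, False, 1),
    (0.6, True, 5), (0.4, True, 5), (0.55, False, 5), (0.3, False, 5),
]
print(error_rates_by_quality(records, threshold=0.5))
```

In a fusion framework the same idea is applied to the score densities themselves rather than to error rates at a single threshold.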

An Example of Fusion Performance

Although this example deals with fingerprint and voice modalities, the methodology applies to any combination. Usually a fingerprint is represented by a set of minutiae points derived from endings and bifurcations of the friction ridges within the print; sometimes the relative angles of the ridge lines at the minutiae points are also available. The resulting discrete point-set template is then compared to similarly generated minutiae templates using a point-set matching algorithm designed to account for noise and other distortions due to skin elasticity. The match score is generally a measure of the number of corresponding minutiae pairs. Similarly, a speech segment can be represented by a set of cepstral coefficients [5] derived from its frequency structure, and a match score is calculated based on the similarity between coefficient sets.

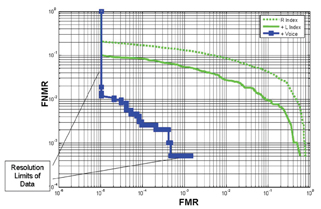

Multiple fingerprint processing was tested using data from NIST Special Database 14. The score probability densities, conditioned on ID = {Match, Not Match} and NIST quality levels, were estimated from a subset of the data and the remaining subset was used for testing. Bimodal fusion was tested by including voice data derived from the XM2VTS [6] database and combined with the fingerprint data to create virtual individuals. Since programmes such as US-VISIT IDENT combine matches from two index fingers, we show results obtained by combining index fingers in Figure 3 that clearly demonstrate the benefit of fusion. Moreover, subsequent fusion with voice data shows even lower error rates. The voice data assists correct decision making, especially when the fingerprint quality is low, because it is an independent measurement. When we ran the test a second time with neutral quality for each fingerprint and voice utterance, regardless of its actual quality value, the fusion benefit was found to be significantly reduced.

Benefits of Fusion

Authentication and identification are primary functions in airport access control. Figure 3 shows two metrics for authentication: the False Match Rate (FMR), the rate at which an impostor successfully passes as a valid passenger, and the False Non-Match Rate (FNMR), the rate at which a valid passenger is denied access. For an identification system, the relevant metrics are the Probability of Miss (PMiss), where an individual on a watch list is not identified by the system, and the Probability of False Match (PFM), which is approximately the FMR times the number of entries in the watch list. Here we examine some implications of multi-biometric fusion for authentication.
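The approximation PFM ≈ FMR × N can be checked numerically. The comparison formula below, 1 − (1 − FMR)^N, is an added assumption (it treats the N watch-list comparisons as independent) and is not stated in the article; the FMR and watch-list sizes are illustrative values only.

```python
def pfm_approx(fmr: float, watch_list_size: int) -> float:
    """Linear approximation from the text: PFM is roughly FMR times N."""
    return fmr * watch_list_size


def pfm_independent(fmr: float, watch_list_size: int) -> float:
    """Probability of at least one false match, assuming independent comparisons."""
    return 1.0 - (1.0 - fmr) ** watch_list_size


# Hypothetical operating point: FMR of one in a million.
for n in (1_000, 100_000, 1_000_000):
    print(n, pfm_approx(1e-6, n), pfm_independent(1e-6, n))
```

The two agree closely while FMR × N is small, and the linear form overstates the probability as FMR × N approaches 1, which shows how a growing watch list tightens the per-comparison FMR an identification system needs.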

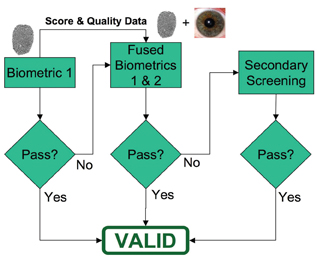

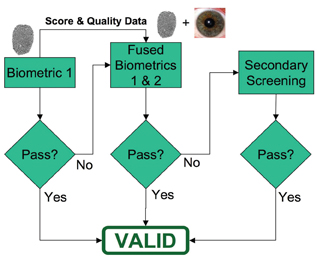

For authentication, the decision threshold is usually set to provide the required false match rate to maintain security while minimising false non-matches (i.e. erroneous referrals to secondary screening). US-VISIT currently requires that finger and face modalities be taken at all points. Higher throughputs may be obtainable under a sequential system that allows some, perhaps most, individuals to proceed after a single modality (see Figure 4). Ideally, of course, one would like a fast, high-accuracy modality to be the first modality in the chain, but this is not always possible. One may have a fast method, such as fingerprints, as the first biometric, with a threshold set to meet the required PFM value. If this threshold is not met, a higher-accuracy but slower modality, such as iris scanning, can be taken. Given the immense difference between the biometric sampling times of even slow modalities and the very long secondary screening times, a longer acquisition time is worth the gain in accuracy at this point.

Also, this is an appropriate point to consider combining the results of both biometrics to provide the most accurate score possible, rather than discarding the first modality when considering the second. Even in systems where all modalities are gathered at every check-point, combining the modalities effectively should result in fewer erroneous referrals to secondary screening.
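The sequential scheme described above, including the suggestion to fuse both modalities rather than discard the first, can be sketched as a small decision function. The function name, the thresholds and the default mean-based `fuse` rule are hypothetical; a real system would substitute a properly calibrated fusion method.

```python
from typing import Callable


def sequential_authenticate(first_score: float,
                            acquire_second: Callable[[], float],
                            first_threshold: float,
                            fused_threshold: float,
                            fuse: Callable[[float, float], float] = lambda a, b: 0.5 * (a + b),
                            ) -> str:
    """Accept on the fast modality if possible; otherwise acquire the slower one and fuse."""
    if first_score >= first_threshold:
        return "accept: first modality"
    # Only now pay the acquisition cost of the slower, more accurate modality.
    second_score = acquire_second()
    # Keep the first score in play instead of discarding it.
    if fuse(first_score, second_score) >= fused_threshold:
        return "accept: fused modalities"
    return "refer to secondary screening"


# Illustrative normalised scores; the lambda stands in for a second capture.
print(sequential_authenticate(0.95, lambda: 0.0, first_threshold=0.9, fused_threshold=0.7))
print(sequential_authenticate(0.60, lambda: 0.9, first_threshold=0.9, fused_threshold=0.7))
print(sequential_authenticate(0.20, lambda: 0.3, first_threshold=0.9, fused_threshold=0.7))
```

Passing the second acquisition as a callable reflects the throughput argument: passengers who clear the first threshold never incur the slower capture.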

Based on the performance curves in Figure 3, given a 0.1% FMR (probability of impostor passage), the FNMR (probability of failing to match a valid passenger) goes from 5.5% for two fingers alone to 0.05% for two fingers plus voice, a roughly 100-fold (more precisely, 110-fold) reduction. Thus, at a checkpoint processing 10,000 passengers daily, this translates into a reduction from 550 secondary screenings to 5. Note that Figure 3 is derived from laboratory studies, not field data, and merely indicates possible gains due to quality-dependent multi-biometric fusion. In the field, ancillary errors such as statistical differences between algorithm training data and field data, clerical errors, equipment misuse, failures to enroll, etc. will cause additional failures to match.
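The arithmetic behind these figures is short enough to verify directly. The sketch assumes, as the text implicitly does, that all 10,000 passengers are valid and that every false non-match leads to one secondary screening.

```python
def expected_referrals(passengers: int, fnmr: float) -> float:
    """Expected number of valid passengers wrongly sent to secondary screening."""
    return passengers * fnmr


PASSENGERS_PER_DAY = 10_000
FNMR_TWO_FINGERS = 0.055          # 5.5% at 0.1% FMR (Figure 3)
FNMR_FINGERS_PLUS_VOICE = 0.0005  # 0.05% at 0.1% FMR (Figure 3)

before = expected_referrals(PASSENGERS_PER_DAY, FNMR_TWO_FINGERS)
after = expected_referrals(PASSENGERS_PER_DAY, FNMR_FINGERS_PLUS_VOICE)
print(round(before), round(after), round(before / after))
```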

Summary

Multi-biometric fusion techniques perform much better than current single-mode systems. This performance improvement should translate to a reduction in the number of secondary inspections and consequently reduce the expected per-person delay, even though the time to process multiple biometrics may be longer than for a single biometric. Of course there are other important issues – not addressed here – that must be considered when thinking about implementing multi-biometrics. System integration, data acquisition and storage, privacy, etc. have costs and must be factored into detailed trade studies in order to definitively quantify the benefit of multi-biometrics to the airport industry.
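The claim that a longer acquisition can still reduce the expected per-person delay follows from a simple expectation: acquisition time plus referral probability times secondary-screening time. In the sketch below the FNMR values come from Figure 3, but every timing (10 s, 25 s and 600 s) is a made-up placeholder, and the model ignores queueing and other causes of referral.

```python
def expected_delay(acquisition_s: float, referral_rate: float, secondary_s: float) -> float:
    """Expected per-person delay in seconds under the simple model described above."""
    return acquisition_s + referral_rate * secondary_s


SECONDARY_SCREENING_S = 600.0  # hypothetical: ten minutes per secondary inspection

single_mode = expected_delay(10.0, 0.055, SECONDARY_SCREENING_S)   # two fingers only
multi_mode = expected_delay(25.0, 0.0005, SECONDARY_SCREENING_S)   # two fingers plus voice

print(round(single_mode, 1), round(multi_mode, 1))
```

With these placeholder numbers the multi-biometric path is faster on average despite taking longer at the checkpoint; a detailed trade study would replace the placeholders with measured values.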

Figure 1

Figure 2

Figure 3

Figure 4

References

- [1] Debate over usefulness, pitfalls of biometrics intensifies, Homeland Security Daily Wire, 3(185), 16 Oct. 2007.

- [2] TSA approves four products for airport screening, Government Computer News, 092407.

- [3] D. E. Maurer and J. P. Baker, Fusing Multimodal Biometrics with Quality Estimates via a Bayesian Belief Network, Pattern Recognition 41 (2008) 821-832.

- [4] E. Tabassi, C. Wilson, C. Watson, Fingerprint Image Quality, Technical Report 7151, 2004. (Appendices for NISTIR 7151 can be found at http://fingerprint.nist.gov/NFIS.)

- [5] N. Poh and S. Bengio, Database, Protocol and Tools for Evaluating Score-Level Fusion Algorithms in Biometric Authentication, Research Report 04-44, IDIAP, Martigny, Switzerland, 2004.

- [6] K. Messer, J. Matas, J. Kittler, J. Luettin, and G. Maitre, XM2VTSdb: The Extended M2VTS Database, in Proceedings 2nd Conference on Audio and Video-based Biometric Personal Identification (AVBPA), Springer-Verlag, New York, 1999.

About the authors

Donald Maurer

Donald Maurer is a member of the Principal Professional Staff and holds a B. A. from the University of Colorado and a Ph. D. from the California Institute of Technology. Recently Dr. Maurer has been adapting algorithms originally developed for ballistic missile threat discrimination to biometric identification. He is a member of the American Mathematical Society, the New York Academy of Sciences, and the Society of the Sigma Xi.

John Baker

John Baker is a member of the Principal Professional Staff of the Johns Hopkins University (JHU) Applied Physics Laboratory. He currently works in the Navy Aegis Ballistic Missile Defense (Aegis BMD) program, focusing on missile engineering and data fusion. Some data fusion concepts explored in BMD have broad application, including the area of multi-modal biometric data fusion. He holds undergraduate degrees in computer science and electrical engineering from Washington University in St. Louis and a Master’s degree in electrical engineering from JHU.